A few years ago, at a telecommunications company, a team of analysts discovered that more than 30% of their weekly reports were based on incorrect data. Poorly loaded dates, empty fields, duplicates and formatting errors were part of the daily routine. The consequence: wrong decisions, wasted time and a constant sense of distrust towards the data.

That case is no exception; it's the rule.

But something has started to change. And no, it's not just better governance or a new pipeline. It is artificial intelligence transforming the way we understand and manage data quality.

The silent dilemma: when data “looks good” but isn't

Most organizations experience a paradox: they have more data than ever, but less trust in it.

This is not a volume or visualization issue. It's a quality issue.

Small errors (such as empty fields, inconsistent formats, duplicates, or outliers) scale silently and end up affecting business decisions, regulatory compliance, and the customer experience.

The traditional solution: manual reviews, static rules, scheduled validations.

It works... until it stops working.

The new era of data quality: beyond static rules

Traditionally, ensuring data quality involved defining manual rules:

- “If the X field is empty, mark as an error.”

- “If the value is less than 0, reject.”

- “If there is more than one record with the same ID, alert.”
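To make the three rules above concrete, here is a minimal sketch of what a static check might look like in Python. The field names (`email`, `amount`, `id`) and the function `check_static_rules` are hypothetical, chosen only for illustration; a real pipeline would likely use a validation framework instead.

```python
from collections import Counter


def check_static_rules(records, required_field="email",
                       numeric_field="amount", id_field="id"):
    """Apply three hand-written rules; return a list of (record_index, message)."""
    issues = []
    # Count how often each ID appears so duplicates can be flagged.
    id_counts = Counter(r.get(id_field) for r in records)
    for i, r in enumerate(records):
        # Rule 1: a required field must not be empty.
        if r.get(required_field) in (None, ""):
            issues.append((i, f"{required_field} is empty"))
        # Rule 2: a numeric value must not be negative.
        value = r.get(numeric_field)
        if value is not None and value < 0:
            issues.append((i, f"{numeric_field} is negative"))
        # Rule 3: an ID must not appear more than once.
        if id_counts[r.get(id_field)] > 1:
            issues.append((i, f"duplicate {id_field}"))
    return issues


# Hypothetical sample data, invented for this example.
records = [
    {"id": 1, "email": "a@example.com", "amount": 10.0},
    {"id": 2, "email": "", "amount": 5.0},
    {"id": 3, "email": "c@example.com", "amount": -1.0},
    {"id": 1, "email": "d@example.com", "amount": 7.5},
]
issues = check_static_rules(records)
for index, message in issues:
    print(f"record {index}: {message}")
```

Every rule here had to be written by someone who already knew what could go wrong. That is exactly the limitation: the check only catches what it was told to look for.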

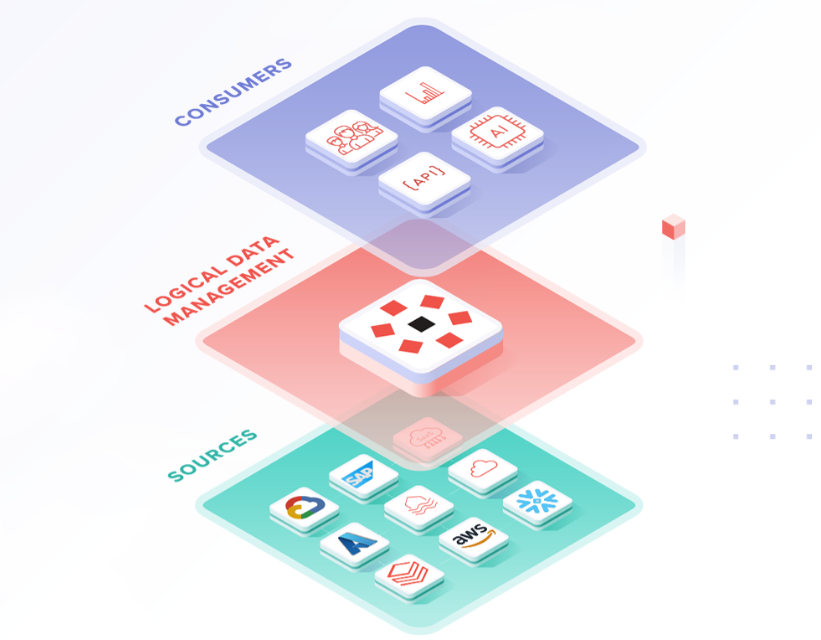

These rules are still useful, but today they are starting to fall short. Why? Because data has ceased to be static. It flows from multiple sources, at different frequencies and in different formats, and it changes constantly.

This is where AI comes into play.

What can AI do for the quality of your data?

Artificial intelligence doesn't replace rules; it amplifies them. And it does so with capabilities that we could hardly achieve with traditional approaches:

1. Unsupervised anomaly detection

Using machine learning algorithms, AI can identify atypical behavior without having to tell it what to look for.

Example: detecting that a date-of-birth field has a spike of records with the year “1900”. You never programmed that check, but the model noticed it.
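A minimal sketch of the idea behind the “1900” example, using only the standard library. It is a simple statistical stand-in (a robust z-score on how often each value appears), not a trained machine learning model, and the function name `find_frequency_spikes`, the threshold of 3.5 and the sample data are all assumptions made for illustration.

```python
from collections import Counter
from statistics import median


def find_frequency_spikes(values, threshold=3.5):
    """Return values whose frequency is unusually high compared to the rest.

    Uses a robust z-score based on the median and the median absolute
    deviation (MAD) of the per-value counts. No value is named in advance.
    """
    counts = Counter(values)
    freqs = list(counts.values())
    med = median(freqs)
    mad = median([abs(f - med) for f in freqs])
    if mad == 0:
        # The score is undefined when most counts are identical;
        # this sketch simply reports nothing in that case.
        return []
    spikes = []
    for value, freq in counts.items():
        score = 0.6745 * (freq - med) / mad
        if score > threshold:
            spikes.append(value)
    return sorted(spikes)


# Hypothetical birth years: a plausible spread plus a suspicious pile-up
# on 1900, which is a common placeholder for "unknown".
birth_years = []
for year in range(1950, 2001):
    birth_years.extend([year] * (18 + year % 5))
birth_years.extend([1900] * 300)

spikes = find_frequency_spikes(birth_years)
print(spikes)
```

Nothing in the code mentions 1900. The year stands out only because its frequency is far from what the rest of the column looks like, which is the essence of the unsupervised approach.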

2. Predicting errors before they reach the user

Models can learn historical patterns and anticipate when data is likely to be corrupt or incomplete.
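As a deliberately simple stand-in for a learned model, the sketch below estimates how likely each data source is to deliver a bad load, based on past outcomes, so that risky loads can be reviewed before they reach a report. The source names, the 0.3 cutoff and the function `failure_risk_by_source` are hypothetical; a production system would probably use richer features and a proper classifier.

```python
from collections import defaultdict


def failure_risk_by_source(history, alpha=1.0):
    """Estimate the probability of a bad load per source.

    history: iterable of (source, had_error) pairs from past loads.
    alpha:   Laplace smoothing, so sources with little history are not
             scored as exactly 0 or 1.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for source, had_error in history:
        totals[source] += 1
        failures[source] += int(had_error)
    return {
        source: (failures[source] + alpha) / (totals[source] + 2 * alpha)
        for source in totals
    }


# Hypothetical load history, invented for this example.
history = (
    [("crm", False)] * 18 + [("crm", True)] * 2
    + [("legacy_ftp", True)] * 6 + [("legacy_ftp", False)] * 4
)
risk = failure_risk_by_source(history)

# Sources above the (assumed) cutoff get a manual review before publishing.
risky_sources = sorted(s for s, p in risk.items() if p > 0.3)
print(risky_sources)
```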

3. Automatic pattern-based correction

From intelligent imputation of missing values to contextual suggestions based on similar records, AI can make your data “correct itself”, under human supervision.
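One simple way to picture “suggestions based on similar records” is group-based imputation: fill a missing value with the median of records that share some attribute. The sketch below assumes hypothetical fields (`plan`, `monthly_fee`) and returns suggestions rather than overwriting anything, so a person can approve them first.

```python
from collections import defaultdict
from statistics import median


def suggest_imputations(records, target, group_by):
    """Suggest a value for each record where `target` is missing.

    The suggestion is the median of `target` among records with the same
    `group_by` value. Returns (record_index, suggested_value) pairs and
    leaves the original records untouched.
    """
    known = defaultdict(list)
    for r in records:
        if r.get(target) is not None:
            known[r[group_by]].append(r[target])

    suggestions = []
    for i, r in enumerate(records):
        similar = known.get(r[group_by])
        # Only suggest when there is at least one similar record to learn from.
        if r.get(target) is None and similar:
            suggestions.append((i, median(similar)))
    return suggestions


# Hypothetical sample data, invented for this example.
records = [
    {"plan": "basic", "monthly_fee": 10.0},
    {"plan": "basic", "monthly_fee": 12.0},
    {"plan": "basic", "monthly_fee": None},
    {"plan": "premium", "monthly_fee": 30.0},
    {"plan": "premium", "monthly_fee": None},
    {"plan": "trial", "monthly_fee": None},
]
suggestions = suggest_imputations(records, target="monthly_fee", group_by="plan")
print(suggestions)
```

The “trial” record gets no suggestion because there is nothing similar to learn from. Declining to guess in that situation is part of keeping a human in the loop.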

4. Continuous monitoring with real-time feedback

AI makes it possible to have systems that evolve with data. You no longer need to manually update the rules. If the data changes, the model adapts.
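A minimal sketch of a monitor that adapts as data changes: it tracks an exponentially weighted mean and variance of a quality metric (here, a daily null rate) and raises an alert when a new value falls far outside the recent baseline. The class name `AdaptiveMonitor`, its parameters and the sample values are assumptions for illustration, not a reference implementation.

```python
class AdaptiveMonitor:
    """Flag metric values that deviate strongly from a moving baseline."""

    def __init__(self, alpha=0.2, k=3.0, warmup=5):
        self.alpha = alpha    # how quickly the baseline follows new data
        self.k = k            # alert when |deviation| > k standard deviations
        self.warmup = warmup  # number of observations before alerting starts
        self.mean = None
        self.var = 0.0
        self.n = 0

    def observe(self, value):
        """Record one value; return True if it looks anomalous."""
        self.n += 1
        if self.mean is None:
            self.mean = value
            return False
        deviation = value - self.mean
        std = self.var ** 0.5
        is_alert = self.n > self.warmup and abs(deviation) > self.k * std
        # Update the baseline after the check, so the threshold moves
        # with the data instead of being fixed by hand.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return is_alert


# Hypothetical daily null rates for one column, with a bad load on day 8.
daily_null_rates = [0.020, 0.021, 0.019, 0.020, 0.022, 0.019, 0.020, 0.150, 0.021]
monitor = AdaptiveMonitor()
alerts = [monitor.observe(rate) for rate in daily_null_rates]
print(alerts)
```

Because the baseline is updated with every observation, a gradual and legitimate shift in the data moves the threshold with it, while a sudden jump still stands out. The trade-off is that a slow degradation can also be absorbed into the baseline, which is one reason human oversight still matters.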

And the results? Real use cases

- Retail: 40% reduction in errors loading products into digital catalogs thanks to automatic validation with NLP.

- Banking: Identification of misclassified transactions that did not comply with regulations, before they reached the auditors.

- Education: Improved integration of student data from multiple platforms, with 25% fewer duplicate records.

But beware: AI is not magic

Implementing AI for data quality is not plug and play. It requires:

- A robust data foundation.

- Model traceability and monitoring.

- Involvement from both the data and business teams.

The key is to understand that AI is no substitute for data strategy; it powers it. It is a co-pilot that needs good fuel (data), a good map (processes) and a trained driver (your team).

Is your company ready to take the next step?

If you've already invested in integrations, visualization and governance, but you're still seeing reports with errors or data that “doesn't add up”, it's time to explore how artificial intelligence can help you raise the quality of your data in a continuous and scalable way.

Because in a world governed by decisions based on data, quality is no longer a luxury: it is the basis of everything.